Introduction

Deploying DeepSeek-R1 on Windows lets you run a capable reasoning model entirely on your own machine, for tasks such as logical reasoning, text generation, and data analysis. This guide walks through the whole process, from installing the necessary tools to hosting Ollama as a local AI server that other devices can reach. Whether you are building a private knowledge base or testing models, the steps below cover a complete setup.

Table of Contents

- What Are DeepSeek-R1, Ollama, and AnythingLLM?

- Core Features and Use Cases

- Installing and Deploying DeepSeek-R1 on Windows

  - Step 1: Install CUDA Toolkit (Optional for GPU Acceleration)

  - Step 2: Install Ollama

  - Step 3: Install Models via Ollama

  - Step 4: Install and Configure AnythingLLM

  - Step 5: Download and Configure Embedding Models

  - Step 6: Upload Local Data to Build a Knowledge Base

- Hosting Ollama as a Server on Windows

  - Configure Environment Variables for Remote Access

  - Open Firewall Ports

  - Verify Remote Access

  - Optional: Public Access via Tunneling Tools

- Troubleshooting Common Issues

1. What Are DeepSeek-R1, Ollama, and AnythingLLM?

DeepSeek-R1

DeepSeek-R1 is an open-source large language model (LLM) designed for tasks like logical reasoning, text generation, and data analysis. It can run with CUDA acceleration on NVIDIA GPUs, in FP16 or in smaller quantized formats, which makes local deployment practical.

Key Features:

- Parameter sizes from 1.5B to 671B to suit different hardware (the 1.5B to 70B versions are smaller models distilled from the full 671B model).

- Smaller variants run fast enough for interactive use on consumer hardware.

- Strong performance on complex reasoning tasks, in both English and Chinese.

Ollama

Ollama simplifies the deployment of local LLMs by downloading, running, and managing models like DeepSeek-R1 with a single command.

Key Features:

- Offline operation for privacy.

- Easy switching between multiple models.

- Customizable installation and model storage locations (model files can be relocated with the OLLAMA_MODELS environment variable).

AnythingLLM

AnythingLLM integrates local LLMs with knowledge bases, enabling contextual Q&A and private data processing.

Key Features:

- Private knowledge base creation.

- Multi-workspace management.

- Contextual Q&A capabilities.

2. Core Features and Use Cases

Use Case 1: Enterprise Knowledge Base and Smart Customer Support

Upload enterprise documents (e.g., manuals, contracts) to AnythingLLM and use DeepSeek-R1 for precise Q&A.

Advantages:

- Local data storage for privacy.

- Multi-format file parsing.

Use Case 2: Research and Analysis

Researchers can process long texts (e.g., papers, reports) to generate summaries or extract key insights.

Examples:

- Legal document analysis.

- Medical literature processing.

Use Case 3: Personal Productivity

- Generate weekly reports from work logs.

- Offline multilingual translation.

- Build a private knowledge base for learning.

Use Case 4: Development and Testing

Developers can test different parameter sizes of DeepSeek-R1 for performance and compatibility.
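When comparing parameter sizes, generation speed is a simple first metric. Ollama's non-streaming /api/generate response includes eval_count (tokens generated) and eval_duration (nanoseconds spent generating), so tokens per second is eval_count × 10^9 ÷ eval_duration. The minimal sketch below assumes Ollama is running on its default port 11434 and that the listed tags have already been pulled. The helper names are illustrative, and a single short prompt gives only a rough comparison.

```python
import json
import urllib.request


def tokens_per_second(response: dict) -> float:
    """Generation speed from a non-streaming /api/generate response.

    eval_count = tokens generated; eval_duration = generation time in nanoseconds.
    """
    duration_ns = response.get("eval_duration", 0)
    if not duration_ns:
        return 0.0
    return response.get("eval_count", 0) * 1e9 / duration_ns


def benchmark(model: str, prompt: str, base_url: str = "http://127.0.0.1:11434") -> float:
    """Run one prompt against a model and return its tokens per second."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    req = urllib.request.Request(
        base_url + "/api/generate", data=payload,
        headers={"Content-Type": "application/json"}, method="POST",
    )
    with urllib.request.urlopen(req, timeout=600) as resp:
        return tokens_per_second(json.loads(resp.read()))


if __name__ == "__main__":
    # Assumption: both tags were pulled beforehand with `ollama pull`.
    for tag in ["deepseek-r1:1.5b", "deepseek-r1:7b"]:
        speed = benchmark(tag, "Explain recursion in two sentences.")
        print(f"{tag}: {speed:.1f} tokens/s")
```

Repeating each prompt a few times and discarding the first run (which includes loading the model into memory) gives steadier numbers.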

3. Installing and Deploying DeepSeek-R1 on Windows

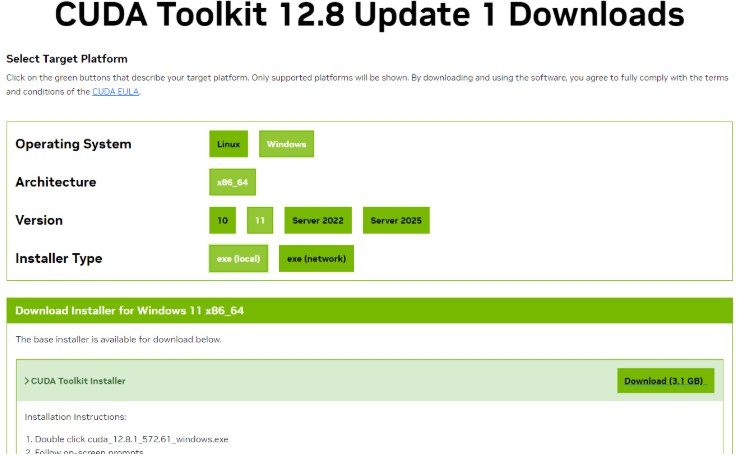

Step 1: Install CUDA Toolkit (Optional for GPU Acceleration)

- Visit the CUDA Toolkit Download Page.

- Select the appropriate version for your Windows system.

- Choose the “exe (local)” installer type, which downloads everything in one package instead of fetching components during setup.

- Reboot your computer after installation.

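Note: CUDA acceleration requires an NVIDIA GPU, so skip this step if you do not have one. Ollama also bundles the CUDA runtime libraries it uses, so an up-to-date NVIDIA driver is often sufficient even without the full toolkit.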
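To check which NVIDIA GPU memory is available before choosing a model, a minimal Python sketch can call nvidia-smi (installed with the NVIDIA driver) and parse its CSV output. The function names, the 7B middle-ground tag, and the slightly relaxed threshold are illustrative assumptions that loosely follow the hardware guidance in Step 3. They are not part of Ollama.

```python
import shutil
import subprocess

# Assumed threshold: 16 GB cards often report slightly less than 16384 MiB.
LARGE_GPU_MIB = 15 * 1024


def parse_vram_mib(csv_output: str) -> list[int]:
    """Parse nvidia-smi CSV output: one total-memory value (MiB) per GPU per line."""
    return [int(line.strip()) for line in csv_output.splitlines() if line.strip()]


def detect_vram_mib() -> list[int]:
    """Return total VRAM per NVIDIA GPU in MiB, or [] if none is detected."""
    if shutil.which("nvidia-smi") is None:
        return []  # nvidia-smi ships with the driver; missing usually means no NVIDIA driver
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=False,
    )
    return parse_vram_mib(result.stdout) if result.returncode == 0 else []


def suggest_model(vram_mib: list[int]) -> str:
    """Hypothetical rule of thumb: 1.5B without a GPU, 14B with a ~16 GB GPU,
    and 7B (an assumed middle ground) for smaller GPUs."""
    if not vram_mib:
        return "deepseek-r1:1.5b"
    if max(vram_mib) >= LARGE_GPU_MIB:
        return "deepseek-r1:14b"
    return "deepseek-r1:7b"


if __name__ == "__main__":
    vram = detect_vram_mib()
    print("VRAM per GPU (MiB):", vram or "none detected")
    print("Suggested tag:", suggest_model(vram))
```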

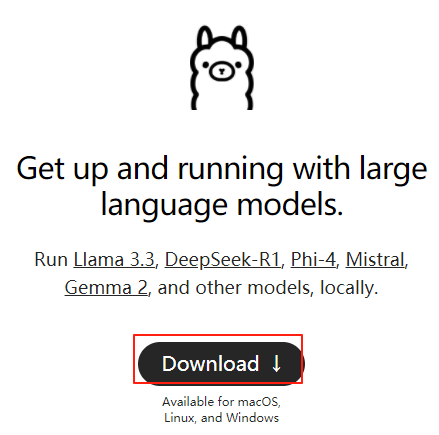

Step 2: Install Ollama

- Download the Ollama installer from the official website.

- Follow the installation prompts.

- Verify that Ollama is running in the background (check the system tray).
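Besides the tray icon, you can confirm the background server is up by requesting its root URL, which answers with the plain text "Ollama is running". The sketch below uses only the Python standard library and assumes Ollama's default address, http://127.0.0.1:11434. The function name is illustrative.

```python
import urllib.request

DEFAULT_BASE_URL = "http://127.0.0.1:11434"  # Ollama's default listen address


def ollama_is_running(base_url: str = DEFAULT_BASE_URL, timeout: float = 3.0) -> bool:
    """Return True if the Ollama server answers on its root URL."""
    try:
        with urllib.request.urlopen(base_url + "/", timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
            return resp.status == 200 and "Ollama is running" in body
    except OSError:
        # URLError, connection refused, and timeouts are all OSError subclasses.
        return False


if __name__ == "__main__":
    print("Ollama running:", ollama_is_running())
```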

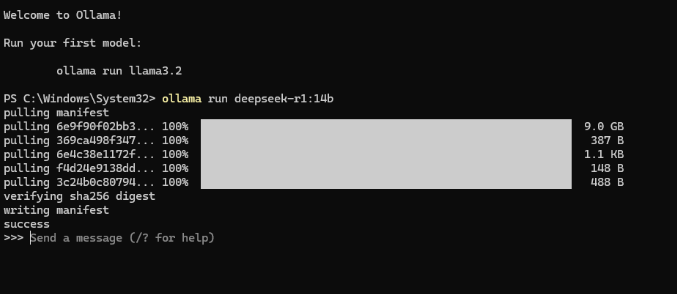

Step 3: Install Models via Ollama

- Visit the Ollama Model Library.

- Select a model based on your hardware:

  - 1.5B for CPU-only setups.

  - 14B+ for GPUs with 16 GB+ VRAM.

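- Run the following command in PowerShell. The first time, ollama run downloads the model and then opens an interactive chat (type /bye to exit). Use ollama pull deepseek-r1:1.5b instead if you only want to download it: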

ollama run deepseek-r1:1.5b
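Once a model is pulled, Ollama also serves it over a local REST API, which is how tools such as AnythingLLM talk to it. The minimal sketch below makes a non-streaming call to /api/generate, assuming the deepseek-r1:1.5b tag from the command above and the default port. DeepSeek-R1 output may include its reasoning inside <think>...</think> tags before the final answer. The hypothetical split_reasoning helper separates the two.

```python
import json
import re
import urllib.request

THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> tuple[str, str]:
    """Split model output into (reasoning, answer); reasoning is "" if no <think> block."""
    match = THINK_RE.search(text)
    if not match:
        return "", text.strip()
    reasoning = match.group(1).strip()
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer


def generate(prompt: str, model: str = "deepseek-r1:1.5b",
             base_url: str = "http://127.0.0.1:11434") -> str:
    """Send one non-streaming request to Ollama's /api/generate endpoint."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    req = urllib.request.Request(
        base_url + "/api/generate", data=payload,
        headers={"Content-Type": "application/json"}, method="POST",
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    reasoning, answer = split_reasoning(generate("What is 17 * 23?"))
    print("Answer:", answer)
```

Setting "stream": False returns the whole answer in one JSON object. Interactive clients usually stream instead, reading one JSON object per line as tokens arrive.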

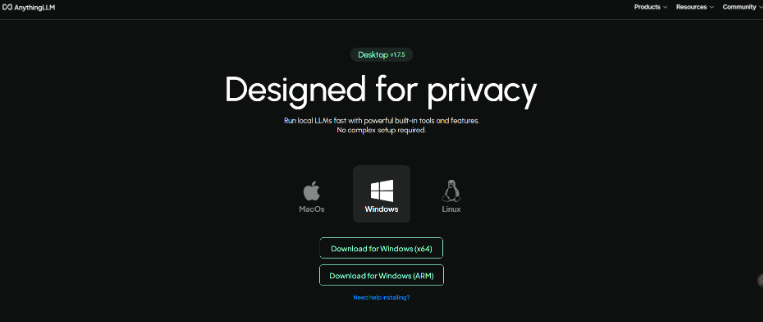

Step 4: Install and Configure AnythingLLM

- Download AnythingLLM from the official website.

- Set “Ollama” as the LLM provider in the settings.

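- AnythingLLM should then list the models you have pulled, such as deepseek-r1:1.5b or deepseek-r1:14b. If none appear, check that the Ollama base URL in its settings is http://127.0.0.1:11434.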
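The models AnythingLLM offers should match what Ollama itself reports. You can query that list directly: Ollama's GET /api/tags endpoint returns JSON with a models array whose entries carry a name such as deepseek-r1:1.5b. The standard-library sketch below uses illustrative helper names.

```python
import json
import urllib.request


def model_names(tags_json: dict) -> list[str]:
    """Extract model names from a GET /api/tags response body."""
    return [m["name"] for m in tags_json.get("models", [])]


def list_models(base_url: str = "http://127.0.0.1:11434") -> list[str]:
    """Fetch the locally installed models from Ollama."""
    with urllib.request.urlopen(base_url + "/api/tags", timeout=10) as resp:
        return model_names(json.loads(resp.read()))


if __name__ == "__main__":
    names = list_models()
    print("Installed models:", names)
    if not any(name.startswith("deepseek-r1") for name in names):
        print("No deepseek-r1 model found; try: ollama run deepseek-r1:1.5b")
```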

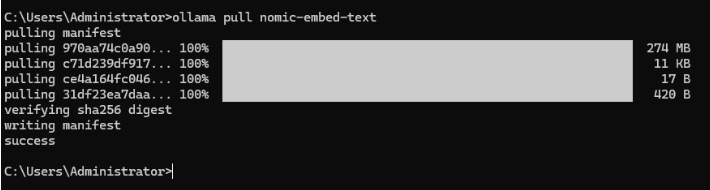

Step 5: Download and Configure Embedding Models

- Open a command-line tool and run:

ollama pull nomic-embed-text

- In AnythingLLM's embedding settings, select Ollama as the provider, choose nomic-embed-text as the embedding model, and save your changes.
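An embedding model turns text into a vector so that passages with similar meaning end up close together. The minimal sketch below requests an embedding from nomic-embed-text through Ollama's /api/embeddings endpoint and compares two vectors with cosine similarity. That endpoint takes the request fields model and prompt and returns the field embedding. Newer Ollama releases also provide /api/embed, so it is worth checking which endpoint your version documents.

```python
import json
import math
import urllib.request


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 for a zero vector)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def embed(text: str, model: str = "nomic-embed-text",
          base_url: str = "http://127.0.0.1:11434") -> list[float]:
    """Request one embedding vector from Ollama's /api/embeddings endpoint."""
    payload = json.dumps({"model": model, "prompt": text}).encode()
    req = urllib.request.Request(
        base_url + "/api/embeddings", data=payload,
        headers={"Content-Type": "application/json"}, method="POST",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.loads(resp.read())["embedding"]


if __name__ == "__main__":
    v1 = embed("How do I reset my password?")
    v2 = embed("Steps to change a forgotten password")
    print("Similarity:", round(cosine_similarity(v1, v2), 3))
```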

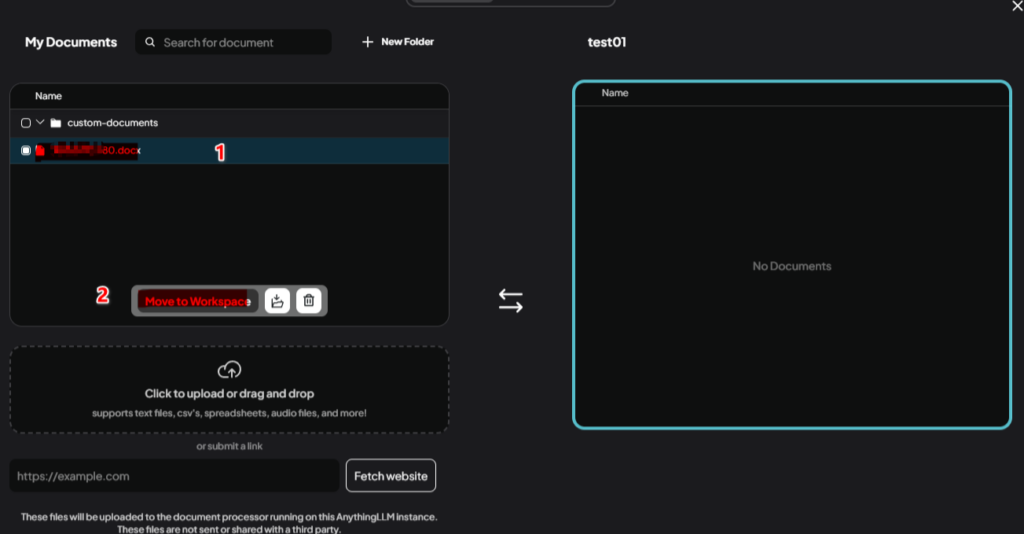

Step 6: Upload Local Data to Build a Knowledge Base

- Navigate to the workspace page in AnythingLLM.

- Click “Upload” and choose one of the following:

  - Directly upload files.

  - Connect to a database.

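- AnythingLLM then splits the content into chunks, converts each chunk into a vector with the configured embedding model, and stores the vectors so the workspace can retrieve relevant passages when answering questions.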
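Conceptually, contextual Q&A works in three steps. First, split documents into chunks and embed each chunk once. Then, at question time, embed the question and keep the most similar chunks. Finally, pass those chunks to DeepSeek-R1 as context. The sketch below illustrates that general retrieval-augmented generation pattern in plain Python. It is not AnythingLLM's actual implementation: the chunk sizes, the prompt wording, and the toy keyword-count embedding in the demo are assumptions. In practice the embedding would come from a real model such as nomic-embed-text.

```python
import math
from typing import Callable

Vector = list[float]


def chunk_text(text: str, size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into overlapping fixed-size character windows."""
    if not 0 <= overlap < size:
        raise ValueError("need 0 <= overlap < size")
    chunks, start = [], 0
    while start < len(text):
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            break  # this window already reaches the end of the text
        start += size - overlap
    return chunks


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def build_index(chunks: list[str], embed_fn: Callable[[str], Vector]) -> list[tuple[str, Vector]]:
    """Embed every chunk once, up front (the "vectorize" step)."""
    return [(chunk, embed_fn(chunk)) for chunk in chunks]


def top_k(question_vec: Vector, index: list[tuple[str, Vector]], k: int = 3) -> list[str]:
    """Return the k chunks whose vectors are most similar to the question vector."""
    ranked = sorted(index, key=lambda item: cosine(question_vec, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def build_prompt(question: str, context: list[str]) -> str:
    """Combine the retrieved chunks and the question into one prompt for the LLM."""
    joined = "\n---\n".join(context)
    return f"Answer using only the context below.\n\n{joined}\n\nQuestion: {question}"


if __name__ == "__main__":
    vocab = ["refund", "shipping", "password"]

    def toy_embed(text: str) -> Vector:
        # Toy embedding for demonstration only: keyword counts, not a real model.
        return [float(text.lower().count(word)) for word in vocab]

    docs = ["How to request a refund", "Shipping times by region", "Resetting your password"]
    index = build_index(docs, toy_embed)
    question = "What is the refund process?"
    print(build_prompt(question, top_k(toy_embed(question), index, k=1)))
```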

4. Hosting Ollama as a Server on Windows

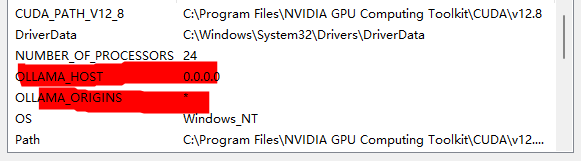

Configure Environment Variables for Remote Access

- Open System Properties → Environment Variables.

- Add the following variables:

OLLAMA_HOST=0.0.0.0

OLLAMA_ORIGINS=* (optional, for cross-origin access)

- Quit Ollama from the system tray and start it again (or restart your computer) so it picks up the new variables.
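Setting OLLAMA_HOST=0.0.0.0 tells Ollama to listen on all network interfaces rather than only 127.0.0.1. The variable can also carry an explicit port, for example 0.0.0.0:11434. The hypothetical helper below shows how a local client script might turn such a value into a URL it can actually connect to. It assumes the default port 11434 when none is given. It also replaces 0.0.0.0 with 127.0.0.1, because 0.0.0.0 is a bind-all address rather than a destination.

```python
import os
from typing import Optional
from urllib.parse import urlsplit

DEFAULT_PORT = 11434


def client_base_url(ollama_host: Optional[str]) -> str:
    """Convert an OLLAMA_HOST-style value into a connectable base URL (IPv4 only)."""
    value = (ollama_host or "127.0.0.1").strip()
    if "://" not in value:
        value = "http://" + value  # urlsplit needs a scheme to find host and port
    parts = urlsplit(value)
    host = parts.hostname or "127.0.0.1"
    if host == "0.0.0.0":
        host = "127.0.0.1"  # listen-on-all address; connect via loopback on this machine
    port = parts.port or DEFAULT_PORT
    return f"{parts.scheme}://{host}:{port}"


if __name__ == "__main__":
    print(client_base_url(os.environ.get("OLLAMA_HOST")))
```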

Open Firewall Ports

Run the following command as an administrator:

netsh advfirewall firewall add rule name="Ollama" dir=in action=allow protocol=TCP localport=11434

To verify that the rule was added:

netsh advfirewall firewall show rule name="Ollama"

Verify Remote Access

- Check the Windows host's IPv4 address with ipconfig.

- Test access from another device:

curl http://[Windows Host IP]:11434/api/tags
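If curl is not available on the other device, the same test can be scripted. The sketch below first checks whether TCP port 11434 accepts connections, which separates firewall or OLLAMA_HOST problems from HTTP-level problems. It then fetches /api/tags. The IP address in the demo is a placeholder, just like [Windows Host IP] in the curl command, and the function names are illustrative.

```python
import json
import socket
import urllib.request


def port_open(host: str, port: int = 11434, timeout: float = 3.0) -> bool:
    """True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False  # refused (nothing listening) or timed out (often a firewall)


def remote_models(host: str, port: int = 11434) -> list[str]:
    """Fetch model names from a remote Ollama server's /api/tags endpoint."""
    with urllib.request.urlopen(f"http://{host}:{port}/api/tags", timeout=10) as resp:
        return [m["name"] for m in json.loads(resp.read()).get("models", [])]


if __name__ == "__main__":
    host = "192.168.1.50"  # placeholder: replace with your Windows host's IPv4 address
    if not port_open(host):
        print("Port 11434 unreachable: check OLLAMA_HOST and the firewall rule.")
    else:
        print("Models:", remote_models(host))
```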

Optional: Public Access via Tunneling Tools

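Use ngrok to expose your server. Ollama has no built-in authentication, so anyone with the public URL can send requests to it. Rewriting the Host header to localhost lets Ollama accept the forwarded requests:

ngrok http 11434 --host-header="localhost:11434"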

5. Troubleshooting Common Issues

Server Connection Failed

- Ensure OLLAMA_HOST and OLLAMA_ORIGINS are configured correctly.

- Restart the Ollama service.

Port Conflict

- Check for port conflicts with:

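netstat -ano | findstr 11434

- If a process other than Ollama holds the port, find it with tasklist /FI "PID eq <PID>", using the PID from the last column of the netstat output. Then stop that process or move Ollama to another port (for example OLLAMA_HOST=0.0.0.0:11435), and update the firewall rule to match.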
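To check from a script whether port 11434 is already taken before starting Ollama, one heuristic is to try binding it yourself. If the bind fails, another socket already holds the port. The sketch below is minimal and uses an illustrative function name. While Ollama itself is running, it will naturally report the port as busy.

```python
import socket


def port_in_use(port: int = 11434, host: str = "127.0.0.1") -> bool:
    """Return True if binding host:port fails because another socket holds it."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return True  # typically "address already in use"
        return False


if __name__ == "__main__":
    print("Port 11434 in use:", port_in_use())
```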

Memory Issues

- Use smaller models (e.g., 1.5B) for low-memory systems.
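To judge whether a model will fit in RAM or VRAM, you can estimate the size of its weights as parameters × bits per weight ÷ 8 bytes. For example, 14B parameters at 4 bits per weight come to about 7 GB for the weights alone, before the context cache and runtime overhead. The sketch below encodes that arithmetic. The 20% overhead factor in fits is an illustrative assumption, not a measured value. Real quantized files also tend to use slightly more than their nominal bit width, which is why the demo uses 4.5 bits.

```python
def weight_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate weight size in GB (1 GB = 1e9 bytes): params * bits / 8."""
    # (params_billion * 1e9) * bits / 8 bytes, divided by 1e9 bytes per GB
    return params_billion * bits_per_weight / 8


def fits(params_billion: float, bits_per_weight: float,
         available_gb: float, overhead: float = 1.2) -> bool:
    """Hypothetical check: weights plus an assumed 20% overhead must fit in memory."""
    return weight_gb(params_billion, bits_per_weight) * overhead <= available_gb


if __name__ == "__main__":
    for size in [1.5, 7, 8, 14, 32, 70]:
        print(f"{size}B at ~4.5 bits: about {weight_gb(size, 4.5):.1f} GB of weights")
```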

This guide covered the steps to deploy DeepSeek-R1 on Windows and host it as a local AI server. For additional details, refer to the official Ollama and AnythingLLM documentation.