Introduction

One of the common challenges in AI image generation is achieving precise art direction. While prompts can guide the general style and content of an image, they often fall short when it comes to controlling specific composition details, such as screen direction or object placement.

In this article, we’ll explore how ControlNet can be used in ComfyUI workflows to overcome these limitations. By leveraging ControlNet models, you can guide the composition of your generated images using external inputs like line drawings or edge maps. This approach enables you to achieve a higher degree of control and precision in your image generation process.

The Problem: Prompt Limitations in Composition

Let’s consider an example: generating an image of a dog facing screen right. Even with a carefully crafted prompt specifying “facing screen right,” the image generated by the Juggernaut XL diffusion model fails to meet this requirement. Instead of facing screen right, the dog faces screen left.

This outcome highlights a key limitation: diffusion models and text encoders often struggle to understand spatial or directional prompts. Without another form of guidance, the only option is to keep trying different seeds until one happens to produce the desired composition, which is slow and unreliable.

Introducing ControlNet

ControlNet provides a solution by introducing external image-based conditioning into the workflow. It works by analyzing a reference image and applying its structure or composition to the generated output.

ControlNet models are designed to work with specific diffusion models. For this example, we’ll use the controlnet-scribble-sdxl-1.0 model, which is compatible with SDXL-based diffusion models such as Juggernaut XL. The Scribble ControlNet accepts simple line drawings as input, making it ideal for rough composition guidance.

Setting Up ControlNet in ComfyUI

Step 1: Download and Install the ControlNet Model

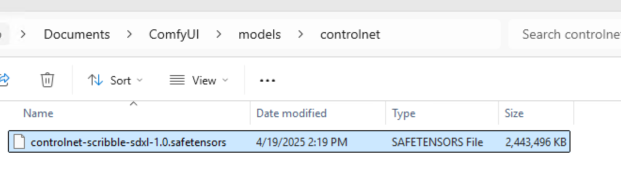

- Download the controlnet-scribble-sdxl-1.0 model from the xinsir/controlnet-scribble-sdxl-1.0 repository on Hugging Face. The file’s original name is diffusion_pytorch_model.safetensors.

- Place the model file in the following directory:

/models/controlnet

- Rename the file to controlnet-scribble-sdxl-1.0.safetensors, then restart ComfyUI so that it loads the new model file.
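The copy-and-rename step can also be scripted. The following is a minimal sketch, assuming the weights have already been downloaded and that the ComfyUI install root is known; the function name `install_controlnet` and the paths in the usage comment are hypothetical and should be adjusted to your setup.

```python
import shutil
from pathlib import Path

# Descriptive file name used in this article.
TARGET_NAME = "controlnet-scribble-sdxl-1.0.safetensors"


def install_controlnet(downloaded_file: Path, comfyui_root: Path) -> Path:
    """Copy the downloaded weights into <comfyui_root>/models/controlnet.

    The file is stored under TARGET_NAME so it is easy to recognize
    in the Load ControlNet Model dropdown.
    """
    target_dir = comfyui_root / "models" / "controlnet"
    target_dir.mkdir(parents=True, exist_ok=True)  # create the folder if missing
    target = target_dir / TARGET_NAME
    shutil.copy2(downloaded_file, target)  # copy, keeping the original download
    return target


# Example usage (hypothetical paths):
# install_controlnet(
#     Path.home() / "Downloads" / "diffusion_pytorch_model.safetensors",
#     Path("ComfyUI"),
# )
```

ComfyUI still needs a restart afterwards before the new file shows up in the model list.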

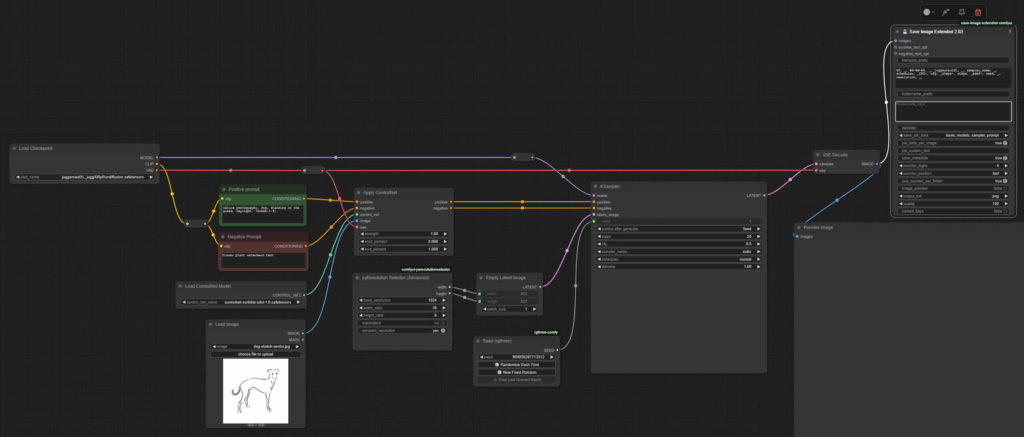

Step 2: Build the Workflow

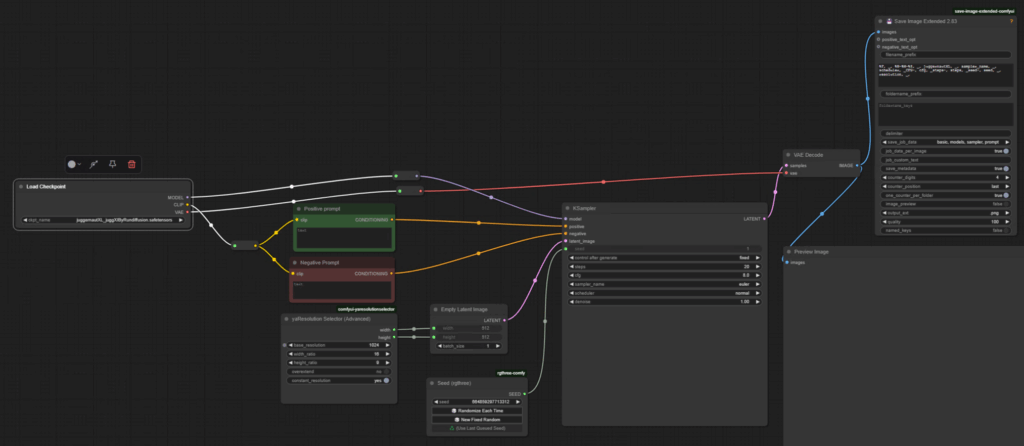

In ComfyUI, you’ll need to create a workflow that integrates the ControlNet model into your image generation process.

Original model:

Follow these steps:

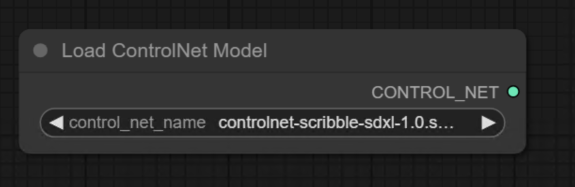

1. Load the ControlNet Model

- Add a Load ControlNet Model node.

- Select the controlnet-scribble-sdxl-1.0 model from the list.

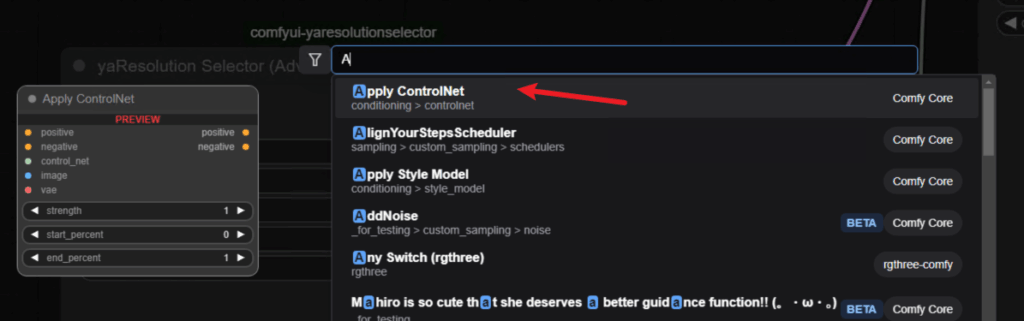

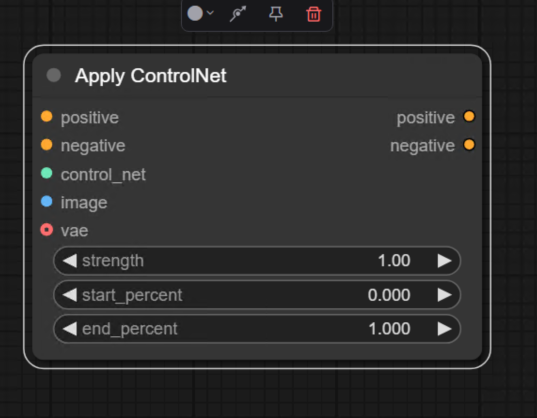

2. Apply ControlNet

- Add an Apply ControlNet node.

- Disconnect the conditioning from the KSampler node and route it through the Apply ControlNet node instead.

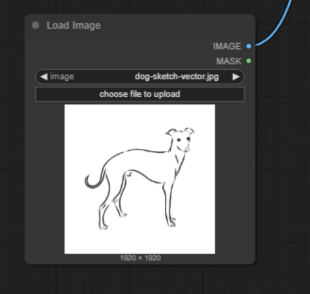

3. Load the Input Image

- Add a Load Image node.

- Upload your line drawing (e.g., a simple sketch of the desired composition).

- Connect the image to the Apply ControlNet node.

4. Complete Connections

- Connect the ControlNet model to the Apply ControlNet node.

- Optionally, connect the VAE to the Apply ControlNet node for completeness (though it won’t affect the results in this case).
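For readers who drive ComfyUI from scripts, the rewiring described above can be sketched on a workflow in ComfyUI's API (JSON) format. This is an illustration under assumptions, not the article's exact graph: the class names (`ControlNetLoader`, `LoadImage`, `ControlNetApply`, `KSampler`) and input names are what I expect an exported API workflow to contain and should be checked against your own export. The checkpoint and sketch file names are hypothetical, and the sketch uses the single-conditioning apply node, so it omits the optional VAE connection.

```python
import copy


def add_controlnet(workflow, sampler_id, controlnet_name, image_name, strength=1.0):
    """Return a copy of the workflow with the sampler's positive
    conditioning routed through a ControlNetApply node."""
    wf = copy.deepcopy(workflow)
    # Assumes node ids are numeric strings, as in exported API workflows.
    next_id = max(int(k) for k in wf) + 1
    loader_id, image_id, apply_id = (str(next_id + i) for i in range(3))

    # 1. Load the ControlNet model.
    wf[loader_id] = {"class_type": "ControlNetLoader",
                     "inputs": {"control_net_name": controlnet_name}}
    # 3. Load the input image (the line drawing).
    wf[image_id] = {"class_type": "LoadImage", "inputs": {"image": image_name}}
    # 2 and 4. Apply ControlNet: take over the link that fed the sampler.
    original_positive = wf[sampler_id]["inputs"]["positive"]
    wf[apply_id] = {"class_type": "ControlNetApply",
                    "inputs": {"conditioning": original_positive,
                               "control_net": [loader_id, 0],
                               "image": [image_id, 0],
                               "strength": strength}}
    wf[sampler_id]["inputs"]["positive"] = [apply_id, 0]
    return wf


# A minimal text-to-image graph; links are [source_node_id, output_index].
base = {
    "1": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "juggernautXL.safetensors"}},  # hypothetical name
    "2": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "nature photography, dog", "clip": ["1", 1]}},
    "3": {"class_type": "CLIPTextEncode", "inputs": {"text": "", "clip": ["1", 1]}},
    "4": {"class_type": "EmptyLatentImage",
          "inputs": {"width": 1024, "height": 1024, "batch_size": 1}},
    "5": {"class_type": "KSampler",
          "inputs": {"model": ["1", 0], "positive": ["2", 0], "negative": ["3", 0],
                     "latent_image": ["4", 0], "seed": 0, "steps": 20, "cfg": 7.0,
                     "sampler_name": "euler", "scheduler": "normal", "denoise": 1.0}},
}

wired = add_controlnet(base, "5", "controlnet-scribble-sdxl-1.0.safetensors",
                       "dog_sketch.png")  # hypothetical sketch file
```

The key move is the same as in the UI: the link that used to go from the prompt encoder to the KSampler now ends at the Apply ControlNet node, and the sampler reads the node's output instead.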

Step 3: Generate the Image

Once your workflow is set up, queue the workflow to generate the image. The ControlNet will guide the composition based on the uploaded line drawing.
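Queuing can also be done from a script instead of the UI. The sketch below assumes a ComfyUI server running locally on its default address (`http://127.0.0.1:8188`) and a workflow already exported in API format; the helper names `build_queue_request` and `queue_workflow` are hypothetical.

```python
import json
import urllib.request


def build_queue_request(workflow: dict,
                        server: str = "http://127.0.0.1:8188") -> urllib.request.Request:
    """Build the POST request that queues an API-format workflow."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    return urllib.request.Request(
        f"{server}/prompt",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def queue_workflow(workflow: dict, server: str = "http://127.0.0.1:8188") -> dict:
    """Send the request and return the server's JSON reply.

    Requires a running ComfyUI instance at the given address.
    """
    with urllib.request.urlopen(build_queue_request(workflow, server)) as resp:
        return json.loads(resp.read())
```

Splitting the request construction from the network call makes it possible to inspect the payload before anything is sent.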

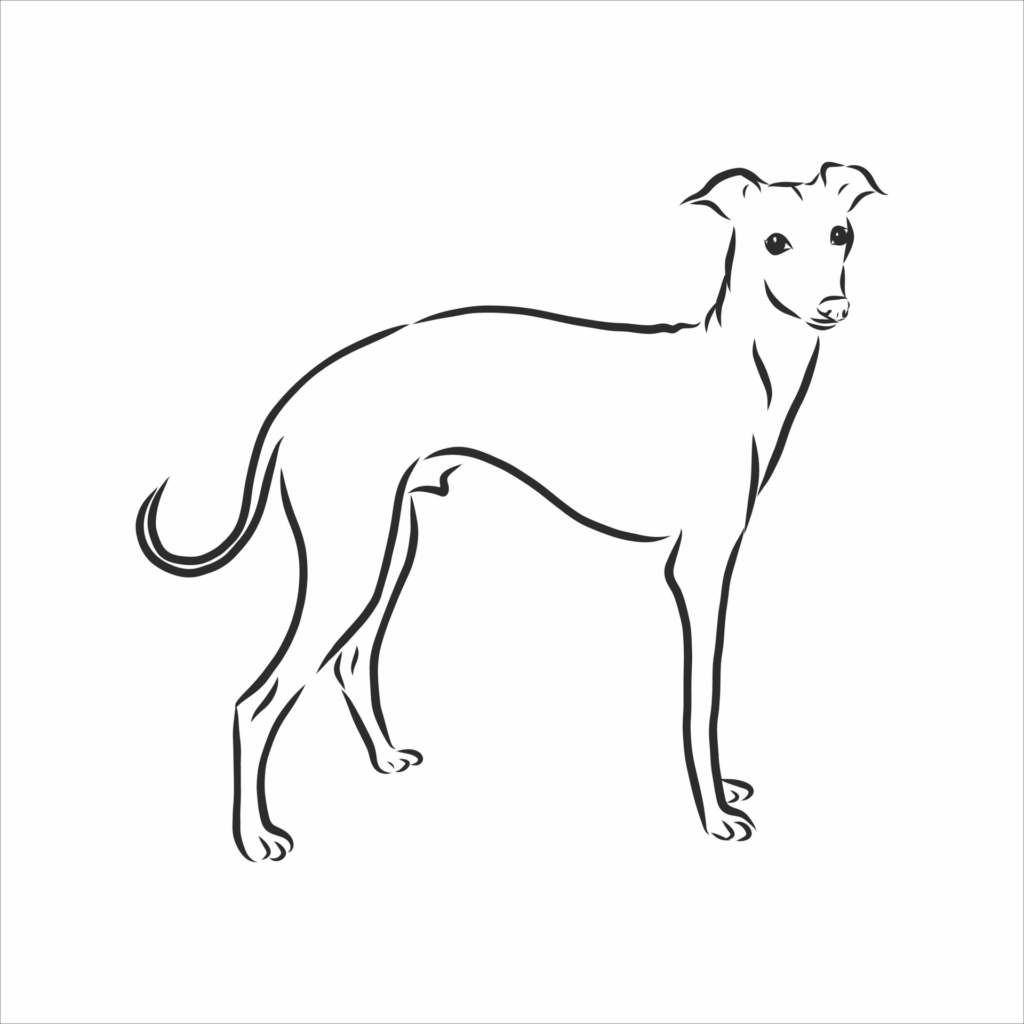

Original Sketch:

Prompt used: nature photography, dog, standing on the grass, daylight, (bokeh:1.5)

Output:

Results and Refinements

Initial Output

Using the ControlNet Scribble model, the generated dog now follows the intended composition. By default, however, the ControlNet is applied at full strength (1.0), which can lead to unnatural results if the input drawing is inaccurate or overly simplistic.

For example, if the uploaded sketch lacks anatomical accuracy, the generated image may look artificial or distorted.

Balancing ControlNet Influence

To address this, you can adjust the ControlNet influence parameters, such as the strength value on the Apply ControlNet node, to strike a balance between the input drawing and the generative model’s natural refinement. A lower strength lets the model fill in missing details while still adhering to the desired composition.
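One practical way to find a good balance is to render the same seed at several strength values and compare the results. Here is a minimal sketch, assuming an API-format workflow in which the Apply ControlNet node exposes a `strength` input; the node id `"8"` and the helper name `strength_variants` are hypothetical.

```python
import copy


def strength_variants(workflow, apply_node_id, strengths):
    """Return {strength: workflow copy} with the ControlNet strength overridden."""
    variants = {}
    for s in strengths:
        wf = copy.deepcopy(workflow)  # leave the original workflow untouched
        wf[apply_node_id]["inputs"]["strength"] = s
        variants[s] = wf
    return variants


# Fragment of a workflow: only the Apply ControlNet node is shown here.
workflow = {
    "8": {"class_type": "ControlNetApply",
          "inputs": {"conditioning": ["2", 0], "control_net": ["6", 0],
                     "image": ["7", 0], "strength": 1.0}},
}

# The values are starting points for experimentation, not recommendations.
variants = strength_variants(workflow, "8", [0.4, 0.7, 1.0])
```

Keeping the seed fixed across the variants means any difference between the images comes from the strength setting alone.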

Types of ControlNet Models

ControlNet models come in several variants, each tailored to specific use cases:

- Scribble ControlNet: Accepts simple line drawings for rough composition guidance.

- Canny ControlNet: Extracts edge details from an input image to guide composition.

- Depth ControlNet: Analyzes depth information to create images with realistic spatial relationships.

Depending on your needs, you can choose the appropriate ControlNet model to guide your workflow.
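To make the idea of an edge map concrete, the sketch below marks pixels where brightness changes sharply. It is a crude gradient threshold written in plain Python, not the Canny algorithm itself (which adds smoothing, non-maximum suppression, and hysteresis), and the function name `edge_map` is hypothetical. It only illustrates the kind of black-and-white structure image an edge-based ControlNet consumes.

```python
def edge_map(gray, threshold=50):
    """Return a same-size image with 255 where brightness jumps, else 0.

    gray is a list of rows, each a list of 0-255 integers.
    """
    h, w = len(gray), len(gray[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Forward differences to the right and downward, clamped at the border.
            dx = gray[y][min(x + 1, w - 1)] - gray[y][x]
            dy = gray[min(y + 1, h - 1)][x] - gray[y][x]
            if abs(dx) + abs(dy) >= threshold:
                out[y][x] = 255
    return out


# A 4x4 test image: dark left half, bright right half.
image = [[0, 0, 200, 200] for _ in range(4)]
edges = edge_map(image)
# Each row of edges is [0, 255, 0, 0]: a vertical line at the brightness boundary.
```

In a real workflow you would use a proper edge detector from an image library or a ComfyUI preprocessor node rather than this toy version.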

Practical Applications

ControlNet is particularly useful for:

- Precise Object Placement: Ensuring objects appear in specific locations or orientations.

- Custom Compositions: Achieving unique layouts that are difficult to describe using text prompts alone.

- Artistic Direction: Combining external sketches or references with generative AI for creative outputs.

Conclusion

Using ControlNet in ComfyUI workflows allows for greater control over image composition, addressing the limitations of prompt-based generation. By integrating external inputs like line drawings, you can guide the placement and orientation of objects with precision.

As a next step, experiment with balancing ControlNet influence to achieve naturalistic and visually appealing results. Whether you’re working on artistic projects or specific design tasks, ControlNet opens up new possibilities for creative direction in AI image generation.