FLUX, a family of text-to-image models developed by Black Forest Labs, is gaining attention for its versatility and performance. In this article, we’ll focus on FLUX.1 Schnell, a distilled version of the FLUX model, and walk through its ComfyUI workflow, including setup, configuration, and optimization. If you’re working with limited video memory or want faster image generation, FLUX.1 Schnell is worth a close look.

What is FLUX?

FLUX.1 is a family of text-to-image models with three primary versions:

- FLUX.1 Pro: A cloud-only, paid version with limited workflow customization. It supports basic prompting workflows.

- FLUX.1 Dev: A locally installable version for non-commercial use.

- FLUX.1 Schnell: A distilled model optimized for speed, producing images in only a few sampling steps. This version suits users with hardware constraints or anyone who prioritizes fast generation.

In this guide, we’ll dive into FLUX.1 Schnell, covering its unique features and how to set it up effectively.

Why Choose FLUX.1 Schnell?

FLUX.1 Schnell is a distilled version of the model, similar to Stable Diffusion 3.5 Large Turbo. Distilled models are trained to produce good images in far fewer sampling steps, which is why Schnell needs only about four steps per image. Distillation does not shrink the model itself, however: FLUX remains a large model (roughly 12 billion parameters), so users with limited video memory should consider downloading the quantized FP8 version from the Comfy-Org Hugging Face page.
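
As a rough, back-of-the-envelope sketch of why a quantized file helps, the snippet below estimates weight size from parameter count and bytes per parameter. It assumes roughly 12 billion parameters for the FLUX.1 transformer alone and ignores the text encoders, the VAE, and runtime activations, so treat the numbers as approximate.

```python
# Rough estimate of weight memory by numeric precision.
# Assumption: ~12 billion parameters for the FLUX.1 transformer alone.
BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}

def weight_gigabytes(num_params: int, precision: str) -> float:
    """Return the approximate weight size in GB (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

FLUX_PARAMS = 12_000_000_000  # approximate, see the assumption above

for precision in ("fp16", "fp8"):
    print(f"{precision}: ~{weight_gigabytes(FLUX_PARAMS, precision):.0f} GB")
```

Under these assumptions, moving from 16-bit to 8-bit weights halves the space the transformer weights occupy.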

Key Features of FLUX.1 Schnell:

- Quantized Model: The FP8 Safetensors version stores weights in 8-bit floating point, which takes roughly half the memory of 16-bit weights.

- Integrated Text Encoders: The checkpoint file includes both CLIP and T5 text encoders, improving prompt adherence and natural language processing.

- Distilled Workflow: FLUX Schnell uses a CFG (Classifier-Free Guidance) scale of 1 and does not support negative prompts, simplifying its configuration.
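
To see why a CFG scale of 1 leaves no role for a negative prompt, here is a minimal sketch of the standard classifier-free guidance blend applied to plain Python lists. The function name `cfg_combine` and the toy numbers are illustrative only; real samplers apply the same formula to the model's predictions.

```python
def cfg_combine(cond, uncond, scale):
    """Standard CFG blend: uncond + scale * (cond - uncond), element-wise."""
    return [u + scale * (c - u) for c, u in zip(cond, uncond)]

positive = [1.0, 2.0, -0.5]   # toy prediction for the positive prompt
negative = [5.0, -3.0, 4.0]   # toy prediction for the negative prompt

# At scale 1 the negative terms cancel and only the positive prediction remains.
print(cfg_combine(positive, negative, 1.0))
# At a higher scale the negative prediction does shape the result.
print(cfg_combine(positive, negative, 3.0))
```

With the scale fixed at 1, whatever is fed into the negative side cancels out of the blend, which is why the negative input can simply be zeroed.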

Setting Up FLUX.1 Schnell in ComfyUI

Follow these steps to configure FLUX.1 Schnell for optimal performance:

1. Download the Model

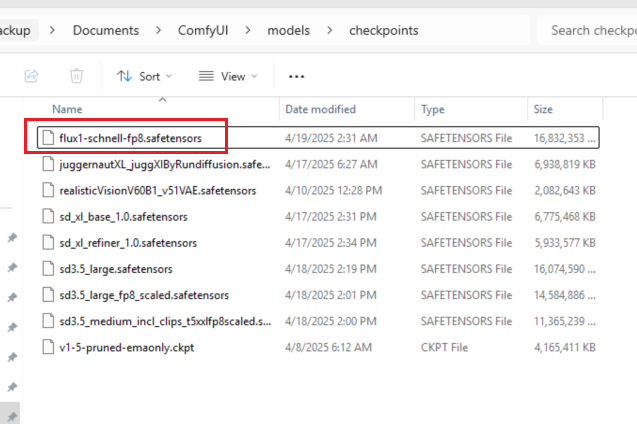

- Visit the Comfy-Org Hugging Face page and download the FLUX.1 Schnell FP8 Safetensors file. This version is quantized for improved video memory compatibility. After downloading the model file, place it under C:\Users\<username>\Documents\ComfyUI\models\checkpoints.
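
A quick way to confirm the file landed in the right place is a small path check. This sketch assumes the default `Documents\ComfyUI` location used above and a file name of `flux1-schnell-fp8.safetensors`; both are assumptions, so adjust them to match your install and the file you actually downloaded.

```python
from pathlib import Path

# Assumed file name; use whatever name the downloaded file actually has.
MODEL_FILENAME = "flux1-schnell-fp8.safetensors"

def checkpoint_path(comfy_root: Path, filename: str = MODEL_FILENAME) -> Path:
    """Build the expected checkpoint location under a ComfyUI folder."""
    return comfy_root / "models" / "checkpoints" / filename

def is_installed(comfy_root: Path, filename: str = MODEL_FILENAME) -> bool:
    """Return True if the checkpoint file exists where ComfyUI looks for it."""
    return checkpoint_path(comfy_root, filename).is_file()

# Assumed install location: Documents/ComfyUI under the current user's home.
comfy_root = Path.home() / "Documents" / "ComfyUI"
print(checkpoint_path(comfy_root), "found:", is_installed(comfy_root))
```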

2. Prepare the Prompt

- FLUX uses both CLIP and T5 text encoders, which makes it highly effective for natural language prompts. Write a detailed, descriptive prompt for better results.

- Note: FLUX.1 Schnell does not support negative prompts. At a CFG scale of 1 the negative conditioning has no influence on the result, so anything typed into a negative prompt is effectively ignored.

3. Handle Negative Inputs

Since FLUX Schnell does not support negative prompts, you’ll need to zero out the conditioning for the negative input. Here’s how:

- Create a Conditioning Zero Out node.

- Connect the conditioning output of the CLIP text encoder node to the Conditioning Zero Out node, then connect that node's output to the negative input of the sampler.

- This ensures no confusion arises from an empty negative prompt.

4. Adjust Sampler Settings

- Set the steps to 4 for optimal speed and performance.

- Set the CFG scale to 1, as required by the distilled model.

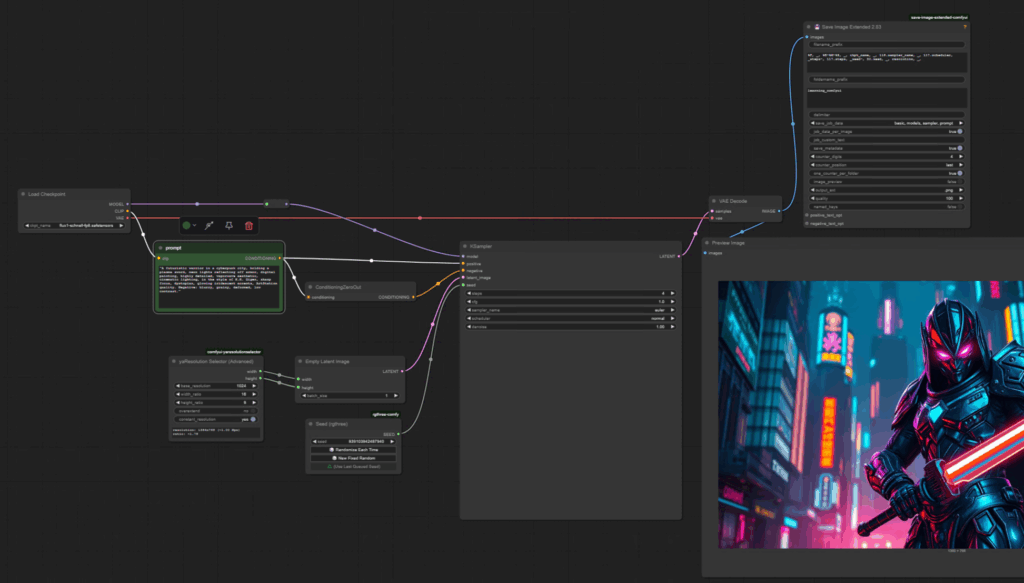

Here’s an example configuration:

Sampler Node Settings:

- Steps: 4

- CFG Scale: 1
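
Putting steps 1 through 4 together, the workflow can be sketched in ComfyUI's API (JSON) format as a Python dictionary. This is an illustration rather than an exported workflow: the node IDs, checkpoint file name, image size, seed, and the `euler` sampler with the `simple` scheduler are assumptions, and the node class names should be checked against your ComfyUI version.

```python
import json

def build_schnell_workflow(prompt: str, seed: int = 0) -> dict:
    """Return an API-format workflow: 4 steps, CFG 1, zeroed negative input."""
    return {
        "1": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": "flux1-schnell-fp8.safetensors"}},  # assumed name
        "2": {"class_type": "CLIPTextEncode",
              "inputs": {"text": prompt, "clip": ["1", 1]}},
        # Zero out the conditioning and feed it to the sampler's negative input.
        "3": {"class_type": "ConditioningZeroOut",
              "inputs": {"conditioning": ["2", 0]}},
        "4": {"class_type": "EmptySD3LatentImage",  # assumed latent node
              "inputs": {"width": 1024, "height": 1024, "batch_size": 1}},
        "5": {"class_type": "KSampler",
              "inputs": {"model": ["1", 0], "positive": ["2", 0],
                         "negative": ["3", 0], "latent_image": ["4", 0],
                         "seed": seed, "steps": 4, "cfg": 1.0,
                         "sampler_name": "euler", "scheduler": "simple",  # assumed
                         "denoise": 1.0}},
        "6": {"class_type": "VAEDecode",
              "inputs": {"samples": ["5", 0], "vae": ["1", 2]}},
        "7": {"class_type": "SaveImage",
              "inputs": {"images": ["6", 0], "filename_prefix": "flux_schnell"}},
    }

workflow = build_schnell_workflow("4k photo, high quality, in a European forest")
print(json.dumps(workflow["5"], indent=2))
```

Each `["node_id", index]` pair is a link to another node's output, so `"negative": ["3", 0]` is the zeroed-out conditioning described in step 3.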

Running the Workflow

Once the model is loaded, the generation process is remarkably fast, requiring only four steps. While the initial model loading may take some time, the streamlined workflow ensures efficient performance thereafter.
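
If ComfyUI is running locally, a workflow saved in API format can also be queued from a script through its HTTP `/prompt` endpoint. The sketch below is a minimal illustration: the server address `127.0.0.1:8188` is the usual default but is an assumption here, and `workflow` stands for any API-format workflow dictionary you have exported or built yourself.

```python
import json
import urllib.request

def build_prompt_request(workflow: dict, server: str = "127.0.0.1:8188") -> urllib.request.Request:
    """Create a POST request that queues an API-format workflow in ComfyUI."""
    payload = json.dumps({"prompt": workflow}).encode("utf-8")
    return urllib.request.Request(
        f"http://{server}/prompt",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def queue_workflow(workflow: dict, server: str = "127.0.0.1:8188") -> dict:
    """Send the request and return the server's JSON reply (needs a running ComfyUI)."""
    with urllib.request.urlopen(build_prompt_request(workflow, server)) as response:
        return json.loads(response.read())
```

Calling `queue_workflow(workflow)` only works while the ComfyUI server is up; building the request separately makes it easy to inspect the payload first.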

Below is some example output:

Prompt: 4k photo, high quality, in a European forest

Prompt: a futuristic cityscape with neon lights and flying cars

Common Issues and Solutions

1. Model Loading Time

FLUX.1 Schnell is a large model, so initial loading may take longer, especially on systems with limited resources. The quantized FP8 version is a smaller file, which shortens loading and lowers memory use.

2. Negative Prompt Confusion

If you’re accustomed to using negative prompts, zero out the conditioning as described above. This keeps the workflow clean and makes it obvious that the negative input is unused.
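
One way to catch these misconfigurations before queuing a job is a small sanity check over an API-format workflow. The helper below is hypothetical (it is not part of ComfyUI) and assumes the sampler is a `KSampler` node with `steps`, `cfg`, and `negative` inputs.

```python
def check_schnell_workflow(workflow: dict) -> list:
    """Return human-readable warnings for settings this guide advises against."""
    warnings = []
    for node_id, node in workflow.items():
        if node.get("class_type") != "KSampler":
            continue
        inputs = node["inputs"]
        if inputs.get("steps") != 4:
            warnings.append(f"node {node_id}: steps is {inputs.get('steps')}, expected 4")
        if inputs.get("cfg") != 1:
            warnings.append(f"node {node_id}: cfg is {inputs.get('cfg')}, expected 1")
        # The negative input should come from a ConditioningZeroOut node.
        source_id = inputs.get("negative", [None])[0]
        source = workflow.get(source_id, {})
        if source.get("class_type") != "ConditioningZeroOut":
            warnings.append(f"node {node_id}: negative input is not zeroed out")
    return warnings

# Tiny example: a sampler left on typical non-distilled settings.
example = {
    "2": {"class_type": "CLIPTextEncode", "inputs": {"text": "blurry"}},
    "5": {"class_type": "KSampler",
          "inputs": {"steps": 20, "cfg": 7.0, "negative": ["2", 0]}},
}
for warning in check_schnell_workflow(example):
    print(warning)
```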

Conclusion

FLUX.1 Schnell offers a fast, efficient option for image generation workflows, particularly for users with hardware constraints. Its combination of CLIP and T5 text encoders supports strong prompt adherence, while distillation keeps generation to just four steps. By following the steps outlined above, you can set up FLUX.1 Schnell for your specific needs.

For more details, refer to the official FLUX documentation or the Comfy-Org Hugging Face page.